GDALR: An Efficient Model Duplication Attack on Black Box Machine Learning Models

Abstract

Trained machine learning (ML) models are core components of proprietary products, and entire business models are built around these ML-powered products. Such products are either delivered as a software package that contains the trained model, or deployed in the cloud with restricted API access for prediction. In ML-as-a-service (MLaaS), users are charged on a per-query or per-hour basis, which generates revenue for the business. Models deployed in the cloud can be vulnerable to model duplication attacks: researchers have found ways to exploit these services and clone the functionality of black box models hidden in the cloud by repeatedly querying the provided APIs. After a successful attack, the attacker no longer needs to pay the cloud service provider. In the worst case, the attacker can also sell the cloned model or use it in a competing business.

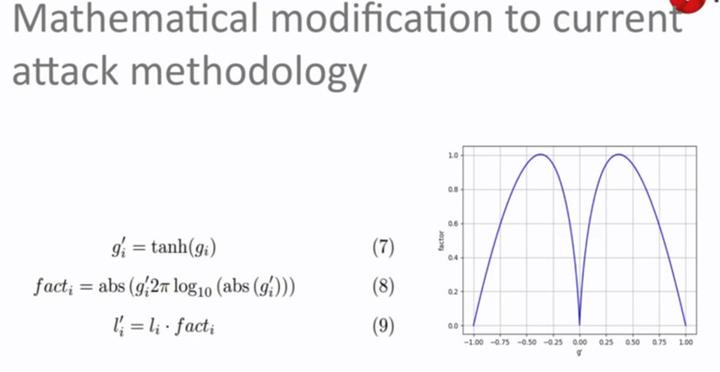

Traditionally, attackers train their models with a convex optimization algorithm such as gradient descent, using appropriately chosen hyper-parameters. In our research we propose a modification to this traditional approach, called GDALR (Gradient Driven Adaptive Learning Rate), that dynamically updates the learning rate based on the gradient values. This steals the target model in comparatively fewer epochs, which reduces the time and cost of the attack and hence increases its efficiency. This shows that sophisticated attacks can be launched to steal black box machine learning models, which increases the risk for MLaaS-based businesses.
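To make the idea concrete, the following is a minimal sketch of a duplication attack in which the learning rate depends on the gradient. It is illustrative only: the abstract does not state the GDALR update rule, so the rule used here (scaling a base learning rate by one plus the gradient norm) is an assumption, and `query_target`, `train_surrogate`, the hidden weights, and all hyper-parameter values are hypothetical stand-ins rather than the setup used in this work.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def query_target(x):
    """Hypothetical stand-in for the black box prediction API.

    The attacker only sees the returned probability, never these weights.
    """
    return sigmoid(2.0 * x[0] - 3.0 * x[1] + 0.5)


def train_surrogate(queries, responses, base_lr=0.5, adaptive=True,
                    tol=1e-4, max_epochs=20000):
    """Fit a logistic surrogate to the API responses with full-batch gradient descent.

    Stops once the mean squared disagreement with the target drops below tol.
    Returns (weights, bias, epochs_used, disagreement).
    """
    n, dim = len(queries), len(queries[0])
    w, b = [0.0] * dim, 0.0
    disagreement = float("inf")
    for epoch in range(1, max_epochs + 1):
        grad_w, grad_b, sq_err = [0.0] * dim, 0.0, 0.0
        for x, y in zip(queries, responses):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            sq_err += err * err
            # Gradient of the cross-entropy loss against the soft API labels.
            grad_b += err / n
            for j in range(dim):
                grad_w[j] += err * x[j] / n
        disagreement = sq_err / n
        if disagreement < tol:
            return w, b, epoch, disagreement
        grad_norm = math.sqrt(grad_b ** 2 + sum(g * g for g in grad_w))
        # ASSUMED rule, not taken from the paper: larger gradients give larger steps.
        lr = base_lr * (1.0 + grad_norm) if adaptive else base_lr
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b, max_epochs, disagreement


# Query the API on random inputs, then train with and without the adaptive rule.
rng = random.Random(0)
queries = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(100)]
responses = [query_target(x) for x in queries]

w_fix, b_fix, epochs_fixed, dis_fixed = train_surrogate(queries, responses, adaptive=False)
w_ada, b_ada, epochs_adaptive, dis_adaptive = train_surrogate(queries, responses, adaptive=True)
print("epochs with fixed learning rate:   ", epochs_fixed)
print("epochs with adaptive learning rate:", epochs_adaptive)
```

The script prints the number of epochs each schedule needs to reach the same disagreement threshold, so the two can be compared on this toy problem. Any difference observed here reflects the assumed rule on a two-feature logistic target and says nothing about the speedup of the actual GDALR rule.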